In case you've been living under a rock, Leetcode-style interviews have dominated the software industry for at least a decade now. From smaller companies all the way to the tech giants, interviews have been centered around these puzzle-type questions designed to probe a candidate's logic, understanding of algorithms and knowledge of data structures. I'm not going to spend time outlining the merits and drawbacks of this style of interview. Rather, this article is meant as an early warning: it's time to start rethinking how we screen talent.

If your company relies on these types of questions for interviews, you should consider your process inherently flawed and its destruction imminent. Astronomers have spotted a meteor heading straight for your company, and it's going to be an apocalyptic event. So, let's start prophesying!

The Darkening Skies

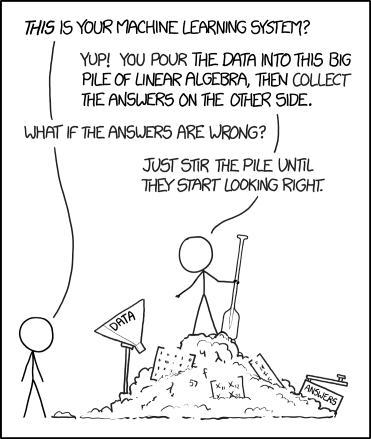

Conditions around this event have been looming for a while. Although the forecast wasn't clear, in hindsight it was the perfect storm. Yes, I'm having fun with these puns. Back to business: the first flaw in this style of interviewing is that it is fairly constrained. There is some well-defined input and some well-defined output, and we expect the candidate to write code that satisfies a set of well-defined test cases. This was a benefit at first, given how short an interview is.
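To make "well-constrained" concrete, here is a minimal Python sketch of a classic puzzle of this kind, the "two sum" problem, chosen purely for illustration and not taken from any particular company's interview. Everything is fixed up front: a list of integers and a target go in, a pair of indices comes out, and a handful of predefined test cases decide pass or fail. The function name and test cases are hypothetical.

```python
from typing import Dict, List, Tuple


def two_sum(nums: List[int], target: int) -> List[int]:
    """Return the indices of the two numbers in nums that add up to target."""
    seen: Dict[int, int] = {}  # maps a value to the index where it was first seen
    for i, n in enumerate(nums):
        complement = target - n
        if complement in seen:
            return [seen[complement], i]
        seen[n] = i
    return []  # no pair adds up to target


# Hypothetical fixed test cases: ((nums, target), expected indices)
CASES: List[Tuple[Tuple[List[int], int], List[int]]] = [
    (([2, 7, 11, 15], 9), [0, 1]),
    (([3, 2, 4], 6), [1, 2]),
    (([3, 3], 6), [0, 1]),
]

for (nums, target), expected in CASES:
    assert two_sum(nums, target) == expected
```

Because the whole problem fits in a few lines and grading is purely mechanical, a memorized answer, or one produced by an AI model, passes exactly as well as one reasoned out live.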

But this gives way to the second Sign of the End Times for this style of interviewing: the ability to brute force (pun intended) through the process. Over the past decade, we've seen an entire industry pop up around this style of interviewing. Websites such as Leetcode make huge databases of problems easily accessible. Books like Cracking the Coding Interview coach people on how to navigate them. There are entire paid training courses that promise to take candidates from zero to hero. It's obvious that these interviews are not testing fundamental problem-solving and coding skills, but rather who practiced the most.

Lastly came the shift to remote-friendly workplaces, which naturally included a shift to remote interviews. In most respects, flexibility in your work environment is a huge boon to individual productivity, and to talent recruitment. But for Leetcode-style interviews, it opens the door to fraud. While the danger was subtle at first, it has now been realized.

The Flashing Light

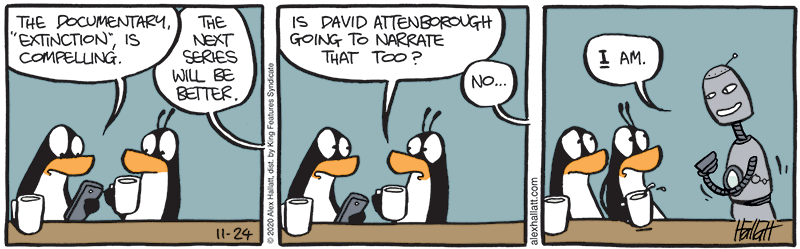

Those conditions brewed ominously in the background, but it wasn't until a new generation of AI models such as ChatGPT and Copilot was released that the tipping point was finally reached. These systems are shockingly good at solving well-constrained problems like these. The vast amount of material available on the internet has effectively trained a lean, mean, interviewing machine. If you don't believe me, give one a whirl yourself. I guarantee you'll be surprised at how comprehensively it can solve these problems. Not only can these systems generate correct code, but they also provide human-readable explanations of the solution!

My dear readers can rightfully point out that cheating your way through this style of interview relies on candidates being dishonest. And with a dash of optimism, we can hope that such candidates are a minority. But while this is hopefully true, the means of cheating have never been so robust, accessible and foolproof. While we may not have to worry about floodgates of fraud, we should consider this a strong signal to rethink the process as a whole. The warning bells have been ringing for quite a while regarding the fallibility of these interviews. It's time to accept that they are not holding water, cut our losses, and move on.

The Final Fallout

Current AI models make silly mistakes every now and then, and at other times they completely butcher a problem. But this article isn't here to convince you that they are perfect. Instead, we should be convinced that they're good enough to take the threat seriously. And the writing is on the wall, folks. If current systems can get through even 60% of your interview screening, that's a big enough danger to your hiring process to seriously reconsider how you screen talent. And the Large Language Model revolution is still in its infancy. Efforts are actively underway to create refined models specifically trained to break your interviews, and I expect these to be publicly available within the next year! Not only that, but we will see even more advanced models released over the next few years.

So as the cycle of training, releasing and refining marches on, your interviews will become even more precarious. And this is not a problem that you will immediately detect. It's a slow poison that will enter your company as lackluster engineers infiltrate via ChatGPT Trojan horses. Or perhaps they will take the honest route and simply be really good at Leetcode. But Leetcode skill is not indicative of real-world engineering, and these engineers are not going to help your company excel and innovate. The frauds will cause you to bleed revenue through your expensive recruitment and onboarding pipelines. And the hires without real engineering skills will cause company-wide productivity to wane as other engineers waste cycles trying to onboard these poorly selected candidates. The end result is that your software is going to atrophy, as new bugs are introduced and team velocity stagnates.

Disaster Recovery

Like any good disaster plan, we need to focus on two separate aspects: how can we mitigate the immediate danger, and how can we rebuild long-term?

To mitigate the immediate risk, we can stop assigning take-home tests, which only open the door to more fraud. And while it's not feasible to drop Leetcode questions overnight, we can at least stop copy-pasting problems from websites, since the models have already been trained on them. Lastly, we should bias our interviews away from whether the candidate found the solution, and toward how they worked their way to it. To be frank, this should already be the case! But, sadly, for a lot of companies it's not. Interviewers need to be trained to read between the lines, to probe for soft skills, and to tell the difference between arriving at a solution and actually solving a problem. As with a good novel, we want to see the struggle, not just the triumph.

Once the bleeding stops, we need to find a long-term solution. To do so, we'll need to drastically reconsider how we interview and search for talent. The main thing we need to change is the current focus on well-constrained problems. Even without outright fraud, Leetcode-style interviews are prone to exploitation by those who pour hours into memorizing solutions. This is probably not the talent we want to hire. Instead, we want to hire problem solvers, out-of-the-box thinkers, excellent communicators and those with the ability to pivot quickly.

What exactly this style of interview might look like could be an entire article in itself. And perhaps it will be, so keep an eye on my blog. For now, though, I'll leave it as a thought experiment for the reader.

Good luck, and Godspeed!