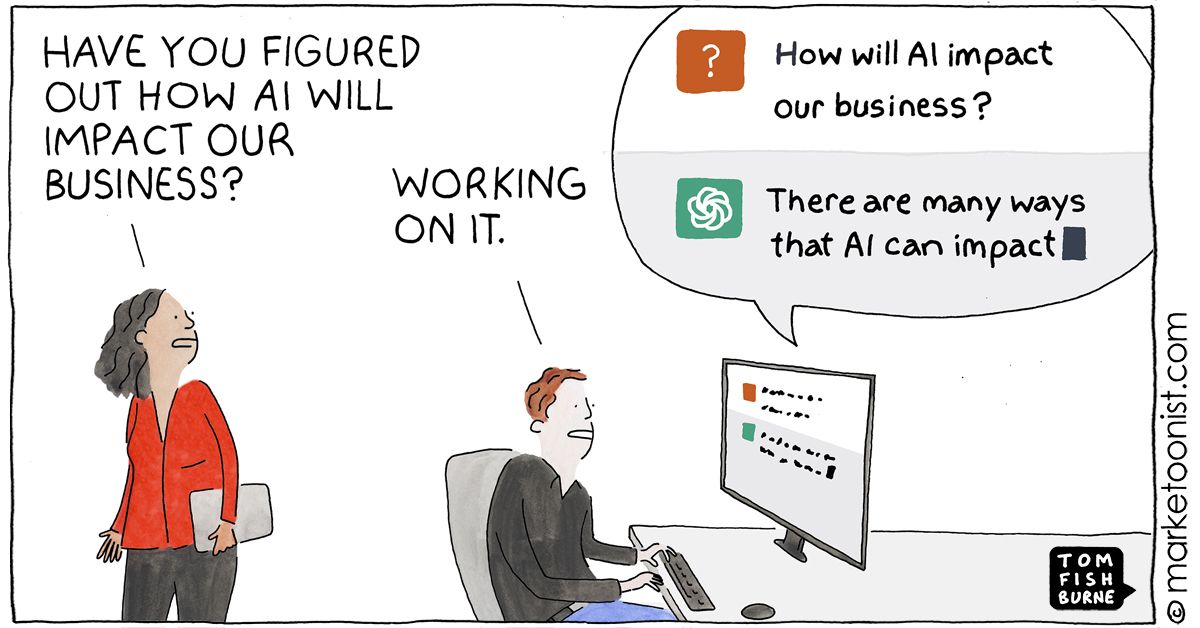

I've been working on a personal project, as a shallow excuse to play around with some of the impressive generative AI tools such as ChatGPT, Bard and GitHub Copilot. The main motivation, aside from my ever-present curiosity, has been to understand more about where they fit into my professional work and how they might impact the software engineering field.

There are many biased and dramatic opinions out there, from people dismissing ChatGPT as fancy autocomplete all the way to folks claiming that these machines have reached sentience. As usual, the truth lies somewhere in the middle of these extremes. In this article, I'll provide a balanced analysis of the capabilities and drawbacks of these tools. I'll use that to frame how software engineering will be affected, and then discuss some ideas on how developers can future-proof their careers by adapting to this revolutionary change.

What are the best parts?

One clear area where these systems excel is answering incessant streams of questions. Without fear of looking stupid, one can continually and unabashedly pester them. And for most popular technologies, it's like having a very patient senior engineer at your beck and call. Generative AI systems are syntax wizards, have memorized the entire standard library, know the idiomatic way to program, and are phenomenal at explaining obfuscated code snippets.

But the fun doesn't stop there! Aside from just reading and explaining code, many large language models (LLMs) can also write code. However, while they may be senior engineers of syntax, they are more comparable to a junior developer when it comes to authoring that code. If you give them a problem that's well-defined and small in scope, they can usually succeed. But like any fledgling developer, one also needs to apply some additional scrutiny to the code they produce. Fortunately, these models are very receptive to feedback! By simply letting them know that an API is deprecated, or that they have a bug in their solution, they will nearly always identify and fix the problem.

So, these powerful LLMs are capable of both reading and writing code. But the hype train doesn't stop there - they can also correct code! One of the more useful ways to leverage these systems is in the form of small-scope code reviews and bug hunting. They are amazing at taking little chunks of code, such as functions or classes, and spotting bugs or offering improvements. As an anecdote, I was working on a class which interacted with the Google Maps SDK, and noticed some rather odd behavior with the markers I was adding to the map. I couldn't figure out what was going on, so decided to take a shot in the dark. I copied the entire class, pasted it into ChatGPT's interface and simply stated "there's a bug in this code, what is it?" To my shock and amazement, it immediately pointed out the problem. I was calling an API function which reloaded map markers, but the API documentation specified that after making such a reload, I needed to then explicitly let the renderer know the underlying data had changed. Wow!
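To make that class of bug concrete, here is a minimal sketch in Python. It is not the Google Maps SDK: `MarkerMap`, `MarkerRenderer`, and `notify_data_changed` are hypothetical names invented for illustration, assuming a renderer that draws from a cached snapshot of the marker data.

```python
class MarkerRenderer:
    """Hypothetical renderer that draws from a cached snapshot of the markers."""

    def __init__(self):
        self._snapshot = []

    def notify_data_changed(self, markers):
        # Refresh the cached snapshot; without this call the renderer stays stale.
        self._snapshot = list(markers)

    def visible_markers(self):
        return list(self._snapshot)


class MarkerMap:
    """Hypothetical map wrapper, invented for this example."""

    def __init__(self):
        self.markers = []
        self.renderer = MarkerRenderer()

    def reload_markers_buggy(self, new_markers):
        # Bug: the underlying data changes, but the renderer is never told.
        self.markers = list(new_markers)

    def reload_markers_fixed(self, new_markers):
        self.markers = list(new_markers)
        # Fix: explicitly let the renderer know the underlying data changed.
        self.renderer.notify_data_changed(self.markers)


buggy = MarkerMap()
buggy.reload_markers_buggy(["cafe", "library"])

fixed = MarkerMap()
fixed.reload_markers_fixed(["cafe", "library"])
```

In the buggy version the data is correct but nothing on screen changes, which is exactly the kind of "odd behavior" that is hard to spot by staring at your own code and easy to spot for something that has read the documentation.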

My takeaway has been, for well-documented APIs and languages, ChatGPT really is a powerhouse of knowledge. Its ability to apply that training knowledge towards real-world code is equally impressive and anxiety-inducing.

What are the worst parts?

Despite the technical acuity of generative AI, there are still many flaws to find. This section will not focus on nitpicking the smaller, implementation-specific details. Many of those are actively being worked on, and are not worth spending considerable time thinking about. Instead, we should be considering the more systemic flaws - those which are unlikely to be addressable in the near future, or perhaps may even be fundamental to the technology itself.

One such example is consistency. With code generation in particular, the same prompt can result in large variations in output quality. That makes sense, as these systems don't have a strong concept of what makes one solution better than another. While this will improve as the feedback loop helps train the models, the problem will always exist with less generic prompts. A related flaw is a result of the training process itself, which often lags behind current trends. This is most frequently evident in the models using outdated syntax, deprecated APIs, or third-party dependencies which are no longer favored.

While these are indeed issues, one could point out that many human programmers often fall into the same problems and biases.
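As one small illustration of the deprecated-API problem, consider getting the current UTC time in Python. `datetime.utcnow()` appears in a great deal of older code and tutorials, but it has been deprecated since Python 3.12 in favor of a timezone-aware call. The helper name `current_utc_timestamp` below is my own, chosen just for this sketch.

```python
from datetime import datetime, timezone


def current_utc_timestamp() -> datetime:
    # Older code commonly uses datetime.utcnow(), which returns a naive
    # datetime and is deprecated as of Python 3.12.
    # The timezone-aware form below is the recommended replacement.
    return datetime.now(timezone.utc)


stamp = current_utc_timestamp()
```

A model trained mostly on older code may reach for the deprecated form, which is the kind of thing worth pointing out to it when reviewing its output.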

Another potent flaw becomes apparent when you zoom away from the finer details and gaze into the problem-solving abyss. A critical place where these models cannot effectively operate is broader-scoped system designs. As an example, they rarely can make sensible judgement calls about when to leverage multiple CPU cores in a solution, or when to perform an IO operation in-memory versus buffering data to the disk. And throughout all of this, they do not have a concept of implementing systems in a user-friendly way. Handling edge cases, sporadic inputs, or gracefully backing out of operations are all areas that still require a human touch to consider and coordinate.
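A simplified sketch of one such judgement call, assuming the task is finding the longest line in a text file: both functions below give the same answer, but which one is appropriate depends on how large the input might get, and that is context a prompt rarely contains. The function names and demo file are hypothetical, made up for this example.

```python
import os
import tempfile


def longest_line_in_memory(path):
    # Simple, but holds the whole file in memory at once.
    with open(path, encoding="utf-8") as handle:
        lines = handle.read().splitlines()
    return max((len(line) for line in lines), default=0)


def longest_line_streaming(path):
    # Reads one line at a time, so memory use stays roughly constant.
    longest = 0
    with open(path, encoding="utf-8") as handle:
        for line in handle:
            longest = max(longest, len(line.rstrip("\n")))
    return longest


# Small demo file so the sketch can be run as-is.
with tempfile.NamedTemporaryFile(
    "w", suffix=".txt", delete=False, encoding="utf-8"
) as tmp:
    tmp.write("short\na much longer line\nmid\n")
    demo_path = tmp.name

in_memory_result = longest_line_in_memory(demo_path)
streaming_result = longest_line_streaming(demo_path)
os.remove(demo_path)
```

For a small configuration file the first version is perfectly fine; for a multi-gigabyte log it could exhaust memory. Someone still has to know which situation they are in.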

Lastly, I'd like to address the ongoing criticism of so-called hallucinations, in which LLMs completely fabricate information. While this was an obvious flaw in ChatGPT 3.5, the subsequent versions (4.0+) have mostly fixed it. Unfortunately, popular opinion has not yet caught up with this reality, and the topic of hallucinations remains a very common talking point. A wise reader would be encouraged to view this problem as relatively transient. While it will never be completely fixed, it will rapidly improve to the point of being a non-issue.

How will this impact the industry?

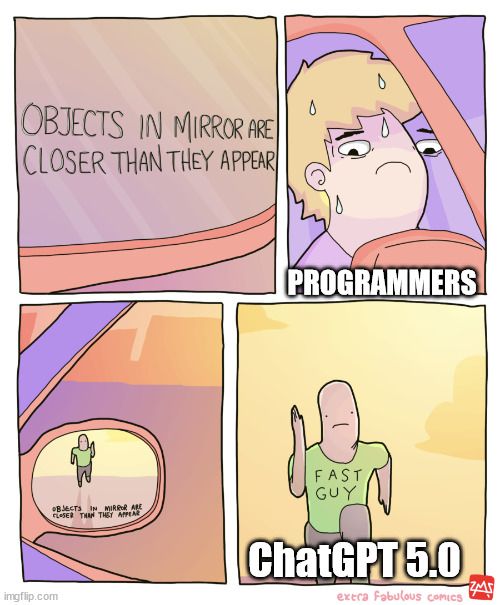

LLMs are one of the largest shifts to occur in software engineering since the adoption of object-oriented programming. When considering the impact, it's important not to focus too heavily on where things are in the present moment. Instead, it would be astute to consider where they will be as the technology continues to mature. Generative AI will only get better in the coming years, as will the tooling and applications built around it.

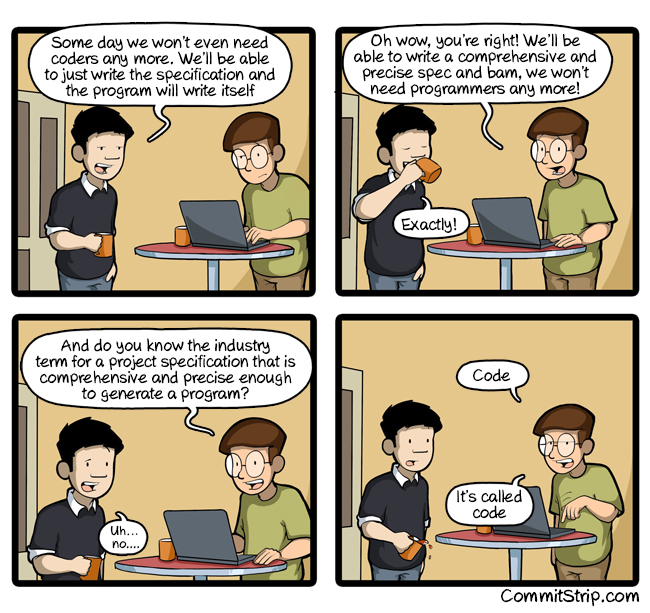

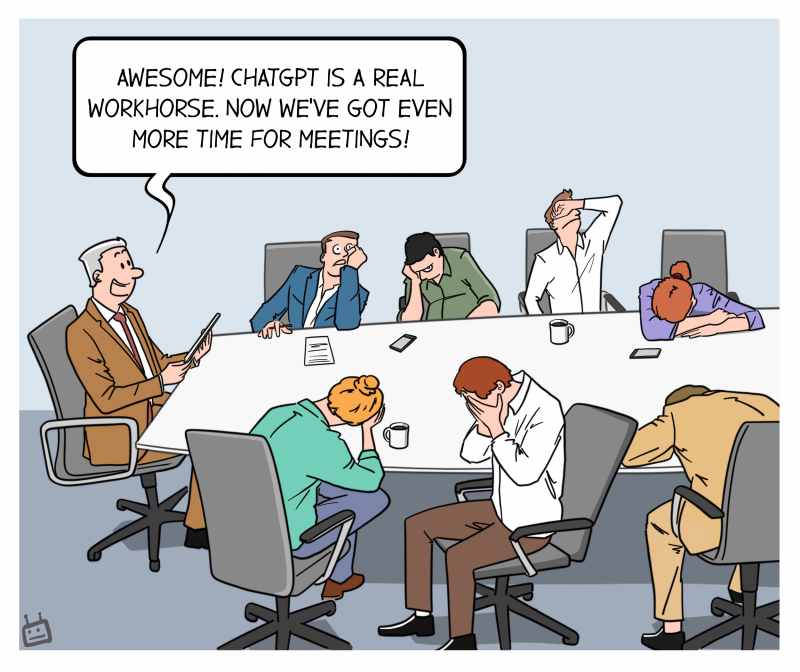

There will be two main industry impacts from this shift. The first is an increase in the productivity of engineers. From bug hunting to boilerplate code, developers will be spending less time writing and more time designing. The second, which follows from the first, is a lower barrier to entry for aspiring professional developers. With these tools shortening the feedback loop and reducing the hair-pulling frustrations of debugging, it does not take a psychic to realize that programming will become more accessible to an even wider audience of talented individuals.

The net result of these changes is that demand for software engineers will come back down from the stratosphere, where we have been happily hanging out for the past few decades. These technologies won't abolish our careers, but they will lower the average salary back towards a more typical knowledge-worker range.

How can you adapt your career?

Generative AI is unlikely to completely replace a talented software engineer. But another engineer effectively using generative AI might! Professional developers should be paying close attention to these tools, while also understanding and working around the drawbacks. They are fantastic launching points, but ultimately still require someone to provide the input, and then someone to utilize the output.

As these tools grow more complex, their ability to write code will continue to get better and better. As such, developers should start focusing on growing the skills that lie outside of raw code creation. Part of that is honing technical skills such as reviewing code, making architectural decisions, and bug hunting. All of these skills will become more in-demand as maintaining and evolving code becomes more important than writing it directly. Engineers should also begin to invest in skills that are closely adjacent to the field. While getting an MBA is overkill, it would be useful to learn more about project management, human-computer interaction, requirements gathering, and software testing or validation. Knowing more about these fields will enable engineers to effectively work on the bigger-picture vision, which helps guide the finer details.

For those that want to continue writing code, these systems will not impact all software disciplines in a uniform way. So if you are especially worried about being automated out of your job, you could instead choose to focus on software which is less likely to be largely impacted by generative AI code creation. Some examples include embedded systems, robotics, or virtually any cutting-edge technology. Conversely, you could avoid the disciplines which will be rapidly impacted. Some examples are existing low-code web development projects, and simple CRUD backend work. A litmus test to consider is how often you are solving unique and novel problems, versus how often you are simply searching and applying known solutions from the web. The latter is what these new technologies do best.

Conclusion

Generative AI tools such as ChatGPT, Bard, and GitHub Copilot are undoubtedly remarkable in their capabilities with software-related tasks. From answering questions to writing and correcting code, they are proving to be an invaluable resource for programmers. And as this technology matures, we can expect them to become even more transformative (pun intended). Rather than fighting against them, or even worse ignoring them completely, it's important to take a pragmatic approach. Embrace them, and evolve your skills as technology evolves itself.

Programming is just one small tool in your toolbox. Engineers are not valuable because they can type on a keyboard; they are valuable because they can take complicated problems and ambiguous requirements, break them down into bits, and construct something which provides value to the world.